Janine Jackson interviewed the Center on Privacy and Technology’s Clare Garvie about facial recognition rules for the June 26, 2020, episode of CounterSpin. This is a lightly edited transcript.

Janine Jackson: Robert Williams, an African-American man in Detroit, was falsely arrested when an algorithm declared his face a match with security footage of a watch store robbery. Boston City Council voted this week to ban the city’s use of facial recognition technology, part of an effort to move resources from law enforcement to community, but also out of concern about dangerous mistakes like that in Williams’ case, along with questions about what the technology means for privacy and free speech. As more and more people go out in the streets and protest, what should we know about this powerful tool, and the rules—or lack thereof—governing its use?

Clare Garvie is a senior associate with the Center on Privacy and Technology at Georgetown Law, lead author of a series of reports on facial recognition, including last year’s America Under Watch: Face Surveillance in the United States. She joins us now by phone. Welcome to CounterSpin, Clare Garvie.

Clare Garvie: Thank you so much for having me on.

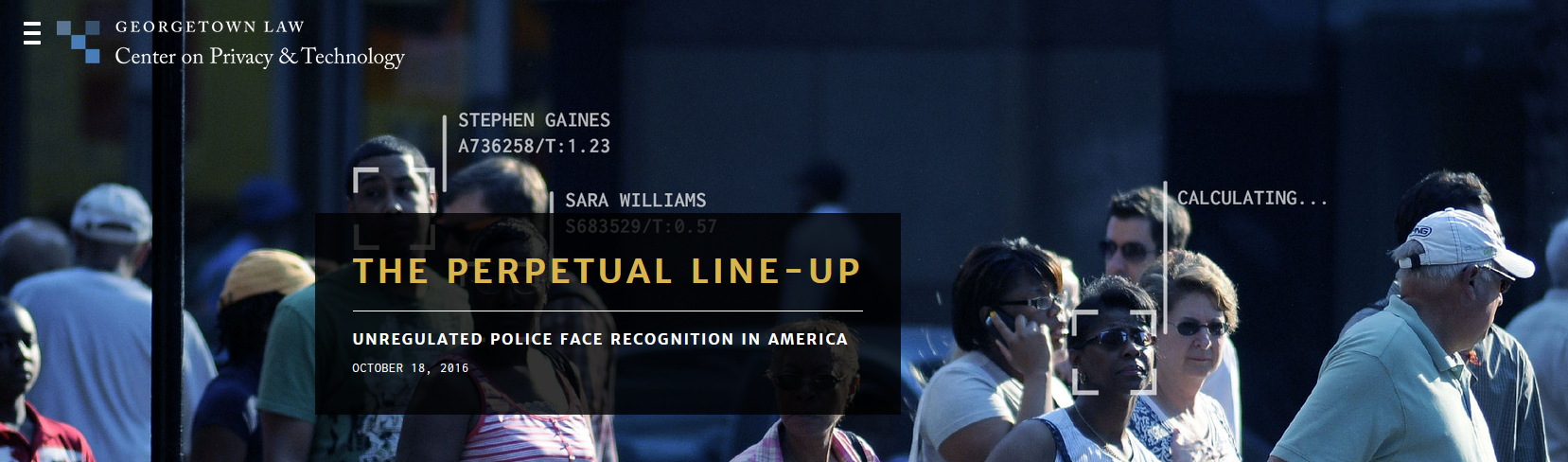

Center on Privacy & Technology (10/18/16)

JJ: I would like to ask, first, for a sense of the prevalence of face recognition technology, and who is affected. People might imagine that it’s a tool, like fingerprinting, that police sometimes use to catch criminals. But then I read in the Center’s earlier report, evocatively titled The Perpetual Line-Up, that one in two American adults is in a law enforcement face recognition network. How can that be? What does that mean?

CG: That’s right. Face recognition use by police in the United States is very, very common. Over half of all American adults are in a database that’s used for criminal investigations, thanks to getting a driver’s license. Robert Williams was not identified through a former mugshot; he was identified through his driver’s license, which most of us have. In addition, we estimate conservatively that over a quarter of all 18,000 law enforcement agencies across the country have access to use face recognition. The most concerning feature is that there are few if any rules governing how this technology can or, more importantly, cannot be used.

JJ: When you say Williams was identified through a driver’s license—we think of someone going through mugshots, a crime has been committed and you go through mugshots to see if you can find someone. But this is really, I mean, we really all are in a line-up, potentially, all the time, if police are using databases of things like driver’s licenses to match with.

CG: That’s right. Generally speaking, if you haven’t committed a crime or had interaction with law enforcement, you’re not in a fingerprint database that’s searched on a routine basis in criminal investigations. You’re certainly not in a DNA database that’s searched for criminal investigations. And yet, thanks to the development of face recognition technology, and the prevalence of face photographs on file in government databases, chances are better than not, you are in a face recognition database that is searched by the FBI or your state or local police, or accessible to them for investigations of any number of types of crimes.

JJ: And to say that the technology and its use are not perfect–I mean, law enforcement can search for matches based on a pencil drawing, or based on a picture of a celebrity, or a photoshopped picture. I found that very interesting. I wonder if you could talk a little bit about so-called “probe” photos. It’s very odd.

CG: So face recognition, very simply, is the ability of law enforcement, or whoever else has a system, to take a photo or a sketch or something else, depicting an unknown individual, and compare it against the database of known individuals–typically mug shots, but also driver’s licenses.

In many jurisdictions, we have found that those probe photos are not limited to photographs. They mostly are–and those are from social media, those are from cell phone photos or videos, those can be from surveillance cameras. But in some jurisdictions, those are also forensic sketches, artists’ renderings of what a witness describes a person looking like, or forensic sculpture created by a lab. Or, in the instance of the NYPD in at least two cases, officers used what they call celebrity lookalikes—somebody, a celebrity who they thought the suspect looked like, to search for the identity of the suspect.

This will fail. Biometrics are unique to an individual. You can’t substitute someone else’s biometrics for your own; that just goes against the rules of biometrics. You also can’t put in a sketch of a biometric. A sketch of a fingerprint sounds ridiculous. You can’t put a sketch of a face in and expect to get a reliable result. And yet, despite this, companies themselves, who are selling this tool, do advocate, in some instances, for the use of this type of probe photo of sketches. They say that that is a permissible use of their technology, despite the fact that it will overwhelmingly fail.

JJ: And the technology being especially bad for black people. That’s not just anecdote; there’s something very real there as well.

CG: Right. Studies of face recognition accuracy continue to show that the technology performs differently depending on what you look like, depending on your race, sex and age, with many algorithms having a particularly tough time with darker skin tones. Pair that with the fact that face recognition will be disproportionately deployed on communities of color. And if it’s running on mugshot databases, face recognition systems will disproportionately be running on databases of, particularly, young black men.

In San Diego, for example, a study of how the city used license plate readers and face recognition found that the city deployed those tools up to two and a half times more on communities of color than the population of San Diego, showing that these tools are focused on precisely the people that they will probably perform the least accurately on.

JJ: The power is obvious of this tool, and the potential for misuse, so what about accountability? You started to say, how would you describe the state—at the federal or local level, or wherever—the state of laws or regulations or guidelines around the use of face recognition?

CG: The laws have not kept up with the deployment of face recognition. As it stands now, a handful of jurisdictions have passed bans on the use of the technology, most recently yesterday in Boston; that was following San Francisco, Oakland, and a couple of other jurisdictions in California and Massachusetts.

But for the vast majority of the country, there are no laws that comprehensively regulate how this technology can and cannot be used. And, as a consequence, it’s up to police departments to make those determinations, often with a complete absence of transparency or input from the communities that they are policing.

JJ: Finally, let’s talk about the story of the day. We’ve read about the FBI combing through the social media of protesters, and charging them under the Anti-Riot Act. The FBI also flying a Cessna Citation, a highly advanced spy plane, with infrared thermal imaging, flying that over Black Lives Matter protests. Where does this surveillance technology intersect with the right to protest? What are the conflicts that you see there?

CG: Face recognition risks chilling our ability to participate in free speech, free assembly and protest. Police departments themselves acknowledge that; back in 2011, there was a Privacy Impact Assessment, written by a bunch of various law enforcement agencies, that said face recognition, particularly used on driver’s license photos, has the ability to chill speech, cause people to alter their behavior in public, leading to self-censorship and inhibition, basically preventing people from participating, or exercising their First Amendment rights.

Face recognition is a tool of biometric surveillance. And if it’s used on protests, it will chill people’s right to participate in that type of behavior. It’s particularly critical, in a moment where we are protesting police brutality and over-surveillance and the over-militarization of police, to take into account how advanced technologies like face recognition play into historical injustices and over-surveilling of communities of color. Face recognition and other advanced technologies must be part of the discussion around scaling back where law enforcement agencies are systems of oppression and of marginalization.

JJ: How can we protect ourselves and one another? We do want to keep going out in the street, but what, maybe, should we be mindful of?

CG: We should be mindful that any photograph or video taken at a protest and published, put online, can be used to identify the people who are caught on camera. So I urge anyone taking photos and videos to keep taking those photos and videos, but train the photos on police, train the videos, train your cameras on the police. To the extent possible blur faces, especially if you think you’re in a jurisdiction that will use face recognition to identify and then go after protesters. Help us keep the anonymity of these protesters, in a world where face recognition does make any photograph into a potential identification tool. It’s really important for all of us to be aware of that.

Now, it shouldn’t be this way. We should have rules that protect us. We don’t, at the moment, so we have to be proactive in protecting the identities of the people that show up on the other side of our camera.

JJ: We’ve been speaking with Clare Garvie, senior associate with the Center on Privacy and Technology at Georgetown Law. You can find them online at law.georgetown.edu. Clare Garvie, thank you so much for joining us this week on CounterSpin.

CG: Thank you for having me on.

CounterSpin | Radio Free (2020-07-02T16:11:56+00:00) ‘Face Recognition Risks Chilling Our Ability to Participate in Free Speech’ – CounterSpin interview with Clare Garvie on facial recognition rules. Retrieved from https://www.radiofree.org/2020/07/02/face-recognition-risks-chilling-our-ability-to-participate-in-free-speech-counterspin-interview-with-clare-garvie-on-facial-recognition-rules/
