Note: I am not attacking people personally, or their right to opinions. However, I have been in this rodeo, academia, since 1983, with the deans, chairs, provosts, VPs and presidents, and a plethora of others, who hands down (not all) got it wrong about the value of and reason for education, K12 and higher ed. This is my subtitle:

another echo chamber of academics yammering and never getting deeper with AI’s bad boy/bad girl history [plus the provost of University of Florida, the “first AI university” sponsored the talk]

Can you imagine this Provost of the University of Florida is all happy about and rah-rah for “his” school becoming the first University of Artificial Intelligence, AI?

I’ve been on these webinars before, and I have been in academia for more than three decades, but as a part-time rabble rouser, not in the star chamber of tenure, emeritus professorship or admin class.

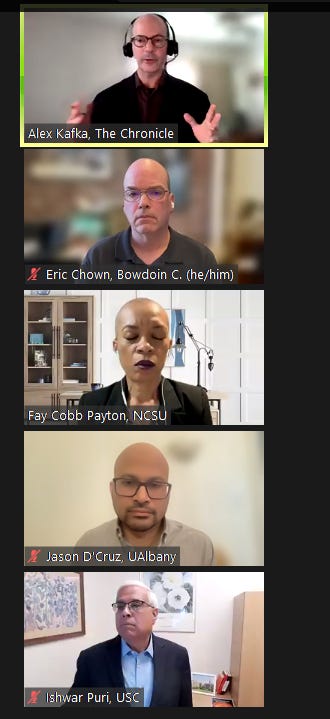

Below, Ishwar, VP of research of USC, talks about how great AI is, but that it can’t predict (now) a black swan event like 9/11. Not yet, but soon. Whew. He is a proponent of AI as just another tool, a fork to scoop up steak and a spoon to slurp up soup.

These people are not really on the edge, not asking to be inside the real debate arena, alas, as they are stuck in academia, and they are not outside-the-box thinkers, since they believe that academics are neutral good guys, and that marketing and monied interests are also in it for good deeds.

The Non-Profit Edifice Complex is a massive front operation to steer money and power into the hands of the corporate fintech elite, while relegating ordinary people into human data capital commodity outputs for the purpose of tyrannical impact investing and authoritative social engineering.

I highly recommend Wrench in the Gears and Silicon Icarus, as well as Algorithm Watch. For now, watch: Alison McDowell- 4th Industrial Revolution/ Listen in as Alison talks about the evolution of technology.

Then this guy, Puri, is looking at the “density of consumption/energy” — that we are going from ICE to EV, Internal Combustion Engines, versus Electric Vehicles, and which one is more dense, the energy that is, how much used, what’s given off as wasted energy (embedded and lifecycle analysis were not discussed) and pollution consequences. (First law of thermodynamics, man …. The first law of thermodynamics states that energy can neither be created nor destroyed, only altered in form. For any system, energy transfer is associated with mass crossing the control boundary, external work, or heat transfer across the boundary. These produce a change of stored energy within the control volume!)

Again, there is no discussion around or about the Fourth Industrial Revolution, no clue about how bad it’s going to be with the Internet of Bodies; no critique of the World Economic Forum, of Davos, of the entire cabal of finance tech gurus who are making HUGE casino capital money on data trolling, data sharing with algorithms, with powering up AI to predict who is and who isn’t, who we are and what we should be or not be, that is the question:

- who follows the party line

- who follows the medical line

- who follows the parenting line

- who follows the employee line

- who follows the capitalist line

- who believes in X, Y, Z in Capitalism and USA and the great power of the elite

…. the who believes and who follows and who does, also is the reverse, as in WHO DOESN’T do, believe, see, hear, follow, lock-step adhere to.

I posed long questions, and alas, they did not field them, or at least not completely or with rigour; at most they dealt partially with some of the points of contention I broached. I tried to copy the questions I put into the Zoom Q & A room before the session was over, but that feature isn’t yet available.

My major premise is that the entire project of K12 and higher education is predicated on the wrong things. Compliance is one of those values, as is forcing youth to follow dictates, another placeholder for follow-the-leader crap. And then, what is college but a venue for financiers and monied interests, with these business schools and all the engineering programs added to universities, all antithetical to liberal education, that is, the arts.

Drawing on Plato and Malcolm X, West said the death process is part of real education (paideia), a concept developed by Socrates that means deep, critical thinking.

It is the antithesis of contemporary culture: “The problem in American society is we are a culture of death-denying, death-dodging… a joyless culture where pleasure-seeking replaces what it means to be human.” (source)

It’s as if these panelists are just conduits for/by/with/because of the tools, the materials, the curriculum drivers, and they have hands down stated that AI is here to stay, and whatever comes is there to stay, no matter how “advanced,” so just teach students how to use it and hope for good energy and karma, be damned.

You know, that argument that “old Einstein did not make the atomic bomb so don’t blame him,” but “some of his work and others’ work helped produce it theoretically, yes, however, it’s not his fault.” Here’s my example of taking responsibility with the science, the quest for splitting the atom.

He came to regret taking even this step. In an interview with Newsweek magazine, he said that “had I known that the Germans would not succeed in developing an atomic bomb, I would have done nothing.” (source)

The old/young/yet to be birthed cat’s out of the bag. I did not expect any of the panelists (forget about the college provost who is a rabid dog of capitalism) to have any exposure to revolutionary and rebellious voices:

Alison McDowell continued: “The same thing is being done for natural capital via NFTs or other forms of tokenization. Yesterday, the Bank of International Settlement was presenting on their Genesis Project – green bonds with smart contracts for carbon offsets. All of this requires real time data collection. That impact data can then be repurposed via Ocean Protocol and Singularitynet.io to train AI. We are building the Singularity with the impact data.”

[Source]

Here, from the Harvard Business Review: “AI Regulation Is Coming: How to prepare for the inevitable” by François Candelon, Rodolphe Charme di Carlo, Midas De Bondt, and Theodoros Evgeniou. From the September–October 2021 issue of the magazine.

Notice there is no discussion about getting the public, the people, the students, the workers, the small business owners, the parents, the old and weak, all of us, in on the discussion, in that big tent, but that’s the dirty me=myself=I=CEO reality of Harvard.

This article explains the moves regulators are most likely to make and the three main challenges businesses need to consider as they adopt and integrate AI. The first is ensuring fairness. That requires evaluating the impact of AI outcomes on people’s lives, whether decisions are mechanical or subjective, and how equitably the AI operates across varying markets. The second is transparency. Regulators are very likely to require firms to explain how the software makes decisions, but that often isn’t easy to unwind. The third is figuring out how to manage algorithms that learn and adapt; while they may be more accurate, they also can evolve in a dangerous or discriminatory way.

Though AI offers businesses great value, it also increases their strategic risk. Companies need to take an active role in writing the rulebook for algorithms

Ahh, regulating and putting a stop to it (AI) and what about studying intended, unintended, and Higher Order of Thinking around that precautionary principle and do no harm aspect of screening anything before it is deployed? Nah.

Finally, the building blocks of AI regulation are already looming in the form of rules like the European Union’s General Data Protection Regulation, which will take effect next year. The UK government’s independent review’s recommendations are also likely to become government policy. This means that we could see a regime established where firms within the same sector share data with each other under prescribed governance structures in an effort to curb the monopolies big tech companies currently enjoy on consumer information.

The latter characterises the threat facing the AI industry: the prospect of lawmakers making bold decisions that alter the trajectory of innovation. This is not an exaggeration. It is worth reading recent work by the RSA and Jeremy Corbyn’s speech [another speech about broadband] at the Labour Party Conference, which argued for “publicly managing” these technologies. (source)

We can continue as we know the ethical discussion around ANYTHING can also be a white wash of the problem:

The quest for ethical AI also could erode the public sector’s own regulatory capacity. For example, after trying to weigh in with a High-Level Expert Group on AI, the European Commission has met with widespread criticism, suggesting that it may have undermined its own credibility to lead on the issue. Even one of the group’s own members, Thomas Metzinger of the University of Mainz, has dismissed its final proposed guidelines as an example of “ethical white-washing.” Similarly, the advocacy group AlgorithmWatch has questioned whether “trustworthy AI” should even be an objective, given that it is unclear who – or what – should be authorized to define such things. (source)

Now now, we have some deep issues to discuss, but the Chronicle of Higher Education in these webinars just can’t get there because they fear outliers, people who are not even in their own camp across the board.

Finally, the document fails to highlight what is truly special about trust-based relations and a trust-based society. While it emphasizes transparency both at the level of fundamental principles and in practices, there seems to be no realization that transparency may not be at the centre of trust-based relations. Indeed, one can argue that one of the distinguishing characteristics of placing trust in others is precisely the willingness to rely on a third party without the ability, or even the need, to check what the other party does. This is not to say that transparency is useless in a trust-based society. But transparency appears to play a complementary role: while most people use AI because they trust it, few people are expected to be inquisitive if there is trust.

On the definition of AI: the definition of AI describes AI as a system that acts in the physical or digital world. But many potential software applications that these guidelines seem to intend to address are not artificial agents. For example, statistical models that provide assistance to human decision makers, without substituting them, do not act in the physical or digital world. Is the guideline not intended to address the concerns raised by those models? Or if so, should the definition of AI be revised?

The above is from Algorithm Watch (imagine that, old folks, something your grandparents would have never imagined):

“‘Trustworthy AI’ is not an appropriate framework: AlgorithmWatch and members of the ELSI Task Force of the Swiss National Research Programme 75 on Big Data comment on the EU HLEG on AI’s Draft Ethics Guidelines”

And, back to the top = with support from the University of Florida this webinar was advanced. That says it all, no, or says something, right?

I posed the idea that a college, like this AI UoF will not be employing faculty who design courses around the idea that AI not only should be regulated, but should possibly have many stops put on it. Nope, no classes on just what do AI and drones do for humanity, when we have 1/3 of people in the world making $2 a day or less. All those deaths by 10,000 capitalism cuts.

What coursework and research would come out of an AI school? And this provost wants all disciplines to be fully embedded in AI technology, and definitely he doesn’t want protestations from people who are not just in the arts but in other departments who see this sinister aspect of big data and all data fed into an AI super computer to get more and more transhumanist like, but futurists who might see this AI order of things is about cloning and replicating what it is to be a human, and how to clone like a human, think like a human, and then, produce the delivery systems of the oligarchs and despots of money and capitalism ways to indeed have more and more control over mind, matter, substance and soul.

That is the issue, too, how this society, many who are deemed rightfully so, freaks of humanity, want to control nature, the weather, cultures, thinking, people’s actions, beliefs, ideology, their own hope and dreams.

They want all the types of sleepers and defecators and urinators and consumers and writers and creatives and misanthropes and all of us on all those various “spectrums” to be analyzed, retrofitted, controlled by a massive human computer brain, globally and in the noosphere. Data collection is more than just scooping up data. They want twinning and cloning.

Now, some medical student using facial recognition AI to see or determine if someone is about to have a stroke might be in it for the Florence Nightingale aspect of medicine, but that’s not the point. You, they, have to think beyond their silos.

[AI surveillance rumors: gay adult content creators face sanctions

by Josephine Lulamae. Early last month, many gay fetish accounts were charged for distributing online porn to minors, a criminal offense in Germany. Many suspect an automated tool, but no one knows for sure.]

{In Germany, a daycare allocation algorithm is separating siblings, by Josephine Lulamae. To allocate this year’s limited number of daycare slots, the city of Münster used an algorithm with a known limitation: it did not direct siblings to the same school. Parents were not pleased.}

[AI and the Challenge of Sustainability: The SUSTAIN Magazine’s new edition

In the second issue of our SUSTAIN Magazine, we show what prevents AI systems from being sustainable and how to find better solutions. ]

Go to Silicon Icarus (dot) org and Wrenchinthegears (dot) com for a million lifetime hours of research on the next next of AI-AR-VR-Global Brain:

This content originally appeared on Dissident Voice and was authored by Paul Haeder.

Paul Haeder | Radio Free (2023-04-15T13:11:33+00:00) Listening to the Chronicle of Higher Ed. Retrieved from https://www.radiofree.org/2023/04/15/listening-to-the-chronicle-of-higher-ed/
